Twitter is preparing to develop a new line of business based on exploiting its users' data, and I cannot help but see in it a reflection of an innovation practice that has already become a repeated pattern for the company: allow an ecosystem of companies to develop around features of its service, see which competitor stands out most, acquire it, and push the others out.

It is a pattern that has been repeated with practically every function Twitter has incorporated from ideas generated, at each point in time, by its community of users or by the companies it managed to attract. For apps on each platform, for example, Twitter allowed several to develop in competition, then acquired one of them and turned it into an official client. With geolocation, with photo sharing, with link shortening, with video embedding... with many of the functions we see on Twitter today, the company has followed the same strategy.

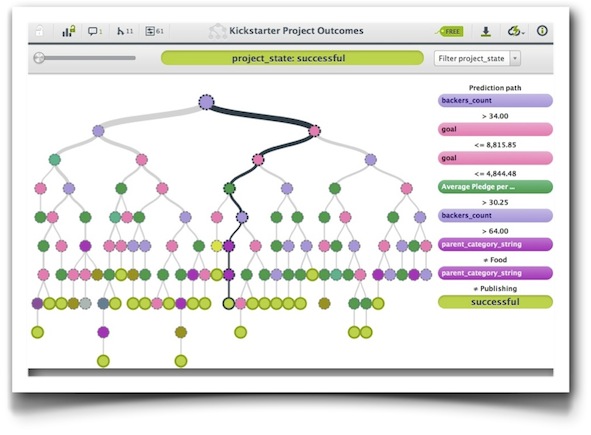

In the present case, the pattern repeats itself: for years, the company allowed multiple companies dedicated to analyzing its data to spring up. Then, in August last year, it acquired Gnip, one of the competitors in that space, for $134 million. After the deal, the company simply let its agreements with its previous partners expire without renewing them: the last of these partners, DataSift, will lose its access to Twitter data on August 13. Finally, Twitter announced that Gnip will be exclusively in charge of exploiting this data, which is proof that big data is a real source of business.

So far, nothing unusual. Or, in Twitter's case, business as usual. What this episode makes me question, however, is the sustainability of these practices in the medium and long term: innovation at Twitter depends largely on the growing demands of its users and on how the business community looks for ways to satisfy them through products built around the ecosystem the company has generated. On that platform, the company does its cherry-picking: it chooses the best option and acquires it. Some of these acquisitions have been absolutely crucial to the company's future. From the point of view of innovation, it is an impeccable practice that exploits the company's ability to create a platform, an ecosystem that draws the attention of third parties. For those who decide to build a business on this platform, the obvious conclusion is what a risky sport it is: after creating and consolidating your activity, either you are the chosen one and become part of Twitter, which seems particularly good at making acquisitions without hollowing out the acquired company and while retaining most of its workers, or you only have a limited amount of time until Twitter decides to throw you out and exploit that business itself.

For entrepreneurs like Loïc LeMeur, who spent several years trying to build products around Twitter with Seesmic and pivoting non-stop to adapt to that ever-changing platform, this is nothing new. But I doubt he has been left eager to try again in the same way. As a formula for innovation, the strategy has definitely paid off. But could Twitter one day find that it has alienated its base of developers and entrepreneurs to the point that, faced with these prospects, companies willing to gamble on building within its ecosystem no longer emerge?

This article is also available in English on my Medium page, "Twitter and its approach to innovation".